Google’s AI Overviews, designed to give quick answers to search queries, reportedly spits out “hallucinations” of false information and draws users away from publishers’ traditional links.

The tech giant, which landed in hot water last year after releasing a “woke” AI tool that generated images of female popes and Black Vikings, has faced criticism for providing false and sometimes dangerous advice in its summaries, according to The Times of London.

In one case, AI Overviews advised adding glue to pizza sauce to help the cheese stick better, the outlet reported.

In another, it described a made-up phrase, “You can’t lick a badger twice,” as a legitimate idiom.

Hallucinations, as computer scientists call them, are compounded by the AI tool reducing the visibility of reputable sources.

Instead of sending users directly to websites, it summarizes information from search results and presents its own AI-generated answer alongside a few links.

Laurence O’Toole, founder of the analytics firm Authoritas, studied the tool’s impact and found that click-through rates to publisher websites drop by 40% to 60% when AI Overviews are displayed.

“While these were generally for queries that people don’t commonly do, it highlighted some specific areas that we needed to improve,” Liz Reid, Google’s head of search, told The Times in response to the glue-on-pizza incident.

The Post has sought comment from Google.

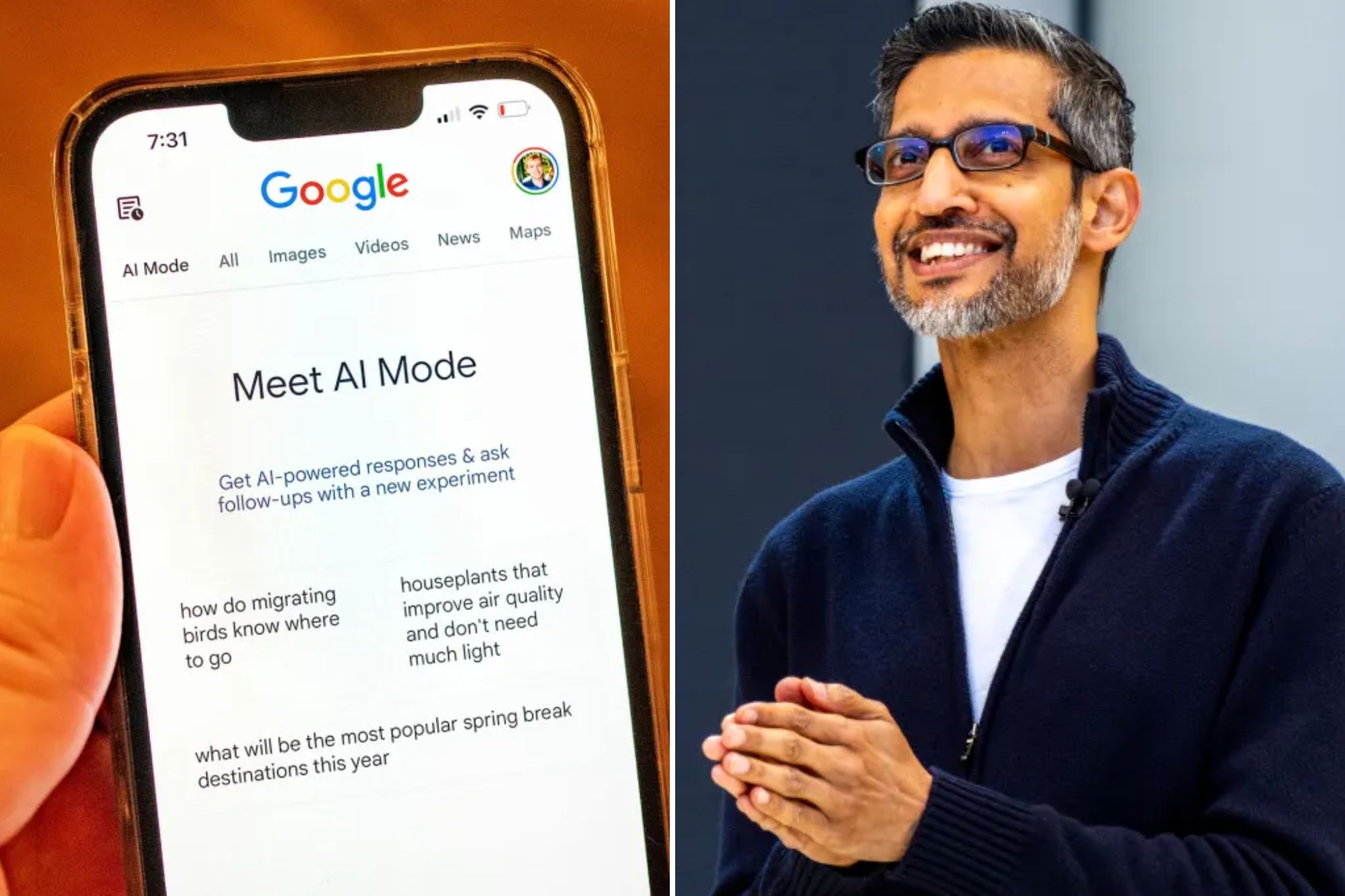

AI Overviews was introduced last summer and is powered by Google’s Gemini language model, a system similar to OpenAI’s ChatGPT.

Despite public concerns, Google CEO Sundar Pichai defended the tool in an interview with The Verge, saying it helps users discover a broader range of information sources.

“Over the last year, it’s clear to us that the breadth of area we are sending people to is increasing … We are definitely sending traffic to a wider range of sources and publishers,” he said.

Google appears to downplay its own hallucination rate.

When a journalist searched for how often Google’s AI gets things wrong, the AI response claimed hallucination rates of between 0.7% and 1.3%.

However, data from the AI platform Hugging Face indicated that the actual rate for the latest Gemini model is 1.8%.

Google’s AI models also seem to offer pre-programmed defenses of their own behavior.

Asked whether AI “steals” artwork, the tool said it does “not steal art in the traditional sense.”

When asked whether people should be afraid of AI, the tool walked through some common concerns before concluding that “fear might be overblown.”

Some experts worry that as generative AI systems grow more complex, they are also becoming more prone to errors, and even their creators cannot fully explain why.

Concerns over hallucinations extend beyond Google.

OpenAI recently admitted that its newest models, known as o3 and o4-mini, hallucinate even more often than previous versions.

Internal testing showed that o3 made up information in 33% of cases, while o4-mini did so 48% of the time, especially when answering questions about real people.


Image Source: nypost.com